Attention

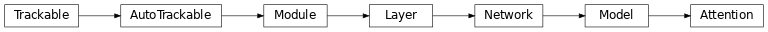

Inheritance Diagram

class ashpy.layers.attention.Attention(filters)

Bases: tensorflow.python.keras.engine.training.Model

Attention Layer from Self-Attention GAN [1].

First we extract features from the previous layer:

\[f(x) = W_f x\]
\[g(x) = W_g x\]
\[h(x) = W_h x\]

Then we calculate the importance matrix:

\[\beta_{j,i} = \frac{\exp(s_{ij})}{\sum_{i=1}^{N}\exp(s_{ij})}\]

where \(s_{ij} = f(x_i)^T g(x_j)\), as defined in [1]. \(\beta_{j,i}\) indicates the extent to which the model attends to the \(i^{th}\) location when synthesizing the \(j^{th}\) region.
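
To make the normalization concrete, here is a minimal pure-Python sketch of the importance matrix. It assumes the scores are already available as a nested list `s`, with `s[i][j]` holding \(s_{ij}\). The function name `importance_matrix` and the sample scores are hypothetical and are not part of ashpy.

```python
import math


def importance_matrix(s):
    """Return beta with beta[j][i] = exp(s[i][j]) / sum_i exp(s[i][j])."""
    n = len(s)
    beta = []
    for j in range(n):
        # Scores of every location i with respect to region j.
        column = [s[i][j] for i in range(n)]
        # Subtracting the maximum leaves the ratio unchanged
        # and avoids overflow in exp().
        m = max(column)
        exps = [math.exp(v - m) for v in column]
        total = sum(exps)
        beta.append([e / total for e in exps])
    return beta


# Hypothetical scores for N = 3 locations.
s = [
    [0.0, 1.0, 2.0],
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
]
beta = importance_matrix(s)
```

Each row of `beta` sums to 1, so row `j` can be read as a distribution over the locations `i` that region `j` attends to. In this sample, regions 0 and 1 see equal scores for all locations and attend uniformly, while region 2 puts most of its weight on location 0.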

Then we calculate the output of the attention layer \((o_1, ..., o_N) \in \mathbb{R}^{C \times N}\):

\[o_j = \sum_{i=1}^{N} \beta_{j,i} h(x_i)\]

Finally we combine the (scaled) attention and the input to get the final output of the layer:

\[y_i = \gamma o_i + x_i\]

where \(\gamma\) is initialized as 0.
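
The steps above can be sketched end to end in plain Python. This is an illustration under stated assumptions, not the ashpy implementation: \(W_f\), \(W_g\) and \(W_h\) are treated as ordinary matrices, the input `x` is stored as a \(C \times N\) list of rows (one column per location), and the scores are taken as \(s_{ij} = f(x_i)^T g(x_j)\), following [1]. The helper names (`matmul`, `transpose`, `self_attention`) and all numeric values are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[r][k] * b[k][c] for k in range(len(b))) for c in range(len(b[0]))]
        for r in range(len(a))
    ]


def transpose(a):
    return [list(col) for col in zip(*a)]


def self_attention(x, w_f, w_g, w_h, gamma):
    """Compute y = gamma * o + x for a C x N input x."""
    n = len(x[0])
    # Feature extraction: f(x) = W_f x, g(x) = W_g x, h(x) = W_h x.
    f, g, h = matmul(w_f, x), matmul(w_g, x), matmul(w_h, x)
    # Scores s[i][j] = f(x_i)^T g(x_j) (assumption taken from [1]).
    s = matmul(transpose(f), g)
    # Importance matrix: beta[j][i], normalized over i for each j.
    beta = []
    for j in range(n):
        exps = [math.exp(s[i][j]) for i in range(n)]
        total = sum(exps)
        beta.append([e / total for e in exps])
    # o_j = sum_i beta[j][i] * h(x_i), i.e. o = h @ beta^T.
    o = matmul(h, transpose(beta))
    # Residual combination with the scalar gamma.
    return [[gamma * o[c][j] + x[c][j] for j in range(n)] for c in range(len(x))]


# Hypothetical input with C = 2 channels and N = 3 locations.
x = [[1.0, 0.0, 2.0], [0.5, 1.0, 0.0]]
w_f = [[1.0, 0.0]]
w_g = [[0.0, 1.0]]
w_h = [[1.0, 0.0], [0.0, 1.0]]

y_init = self_attention(x, w_f, w_g, w_h, gamma=0.0)
y_scaled = self_attention(x, w_f, w_g, w_h, gamma=1.0)
```

With `gamma=0.0` the result equals `x`, which is why initializing \(\gamma\) as 0 makes the layer start out as an identity mapping; the attention term only contributes once \(\gamma\) moves away from 0. In both cases the output keeps the \(C \times N\) shape of the input.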

Examples

Direct Usage:

    import tensorflow as tf
    from ashpy.layers.attention import Attention

    x = tf.ones((1, 10, 10, 64))

    # instantiate attention layer as model
    attention = Attention(64)

    # evaluate passing x
    output = attention(x)

    # the output shape is the same as the input shape
    print(output.shape)

Inside a Model:

    def MyModel():
        inputs = tf.keras.layers.Input(shape=[None, None, 64])
        attention = Attention(64)
        return tf.keras.Model(inputs=inputs, outputs=attention(inputs))

    x = tf.ones((1, 10, 10, 64))
    model = MyModel()
    output = model(x)

    print(output.shape)

(1, 10, 10, 64)

[1] Self-Attention Generative Adversarial Networks https://arxiv.org/abs/1805.08318

Methods

| Method | Description |
| --- | --- |
| __init__(filters) | Build the Attention Layer. |
| call(inputs[, training]) | Perform the computation. |

Attributes

| Attribute | Description |
| --- | --- |
| activity_regularizer | Optional regularizer function for the output of this layer. |
| dtype | |
| dynamic | |
| inbound_nodes | Deprecated, do NOT use! Only for compatibility with external Keras. |
| input | Retrieves the input tensor(s) of a layer. |
| input_mask | Retrieves the input mask tensor(s) of a layer. |
| input_shape | Retrieves the input shape(s) of a layer. |
| input_spec | Gets the network’s input specs. |
| layers | |
| losses | Losses which are associated with this Layer. |
| metrics | Returns the model’s metrics added using compile, add_metric APIs. |
| metrics_names | Returns the model’s display labels for all outputs. |
| name | Returns the name of this module as passed or determined in the ctor. |
| name_scope | Returns a tf.name_scope instance for this class. |
| non_trainable_variables | |
| non_trainable_weights | |
| outbound_nodes | Deprecated, do NOT use! Only for compatibility with external Keras. |
| output | Retrieves the output tensor(s) of a layer. |
| output_mask | Retrieves the output mask tensor(s) of a layer. |
| output_shape | Retrieves the output shape(s) of a layer. |
| run_eagerly | Settable attribute indicating whether the model should run eagerly. |
| sample_weights | |
| state_updates | Returns the updates from all layers that are stateful. |
| stateful | |
| submodules | Sequence of all sub-modules. |
| trainable | |
| trainable_variables | Sequence of variables owned by this module and its submodules. |
| trainable_weights | |
| updates | |
| variables | Returns the list of all layer variables/weights. |
| weights | Returns the list of all layer variables/weights. |